"""

The goal is to minimize the cost.

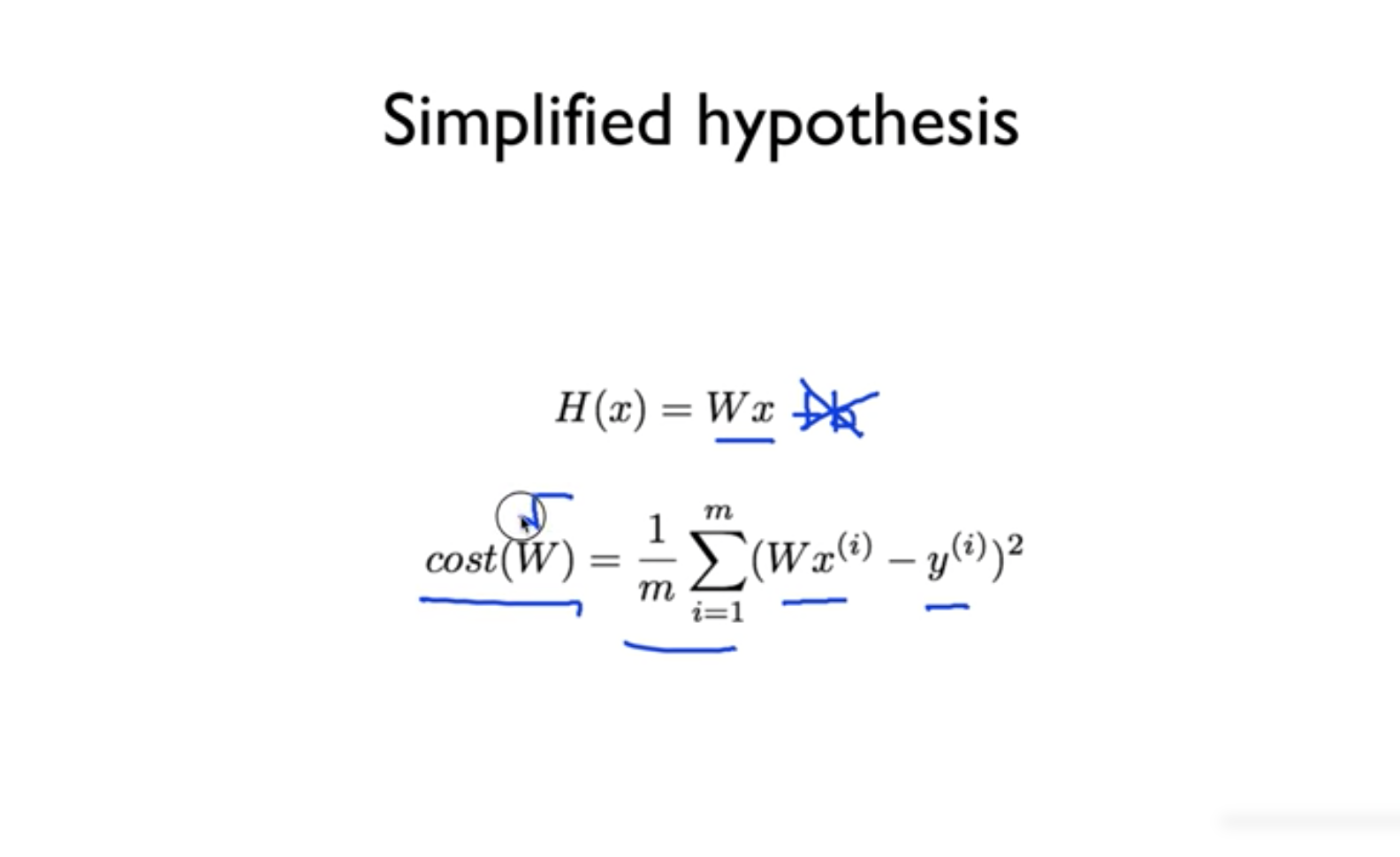

Simplified hypothesis: H(x) = Wx

$cost(W) = \frac{1}{m} \sum_{i=1}^{m} (W x_i - y_i)^2$

What does cost(W) look like?

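Training data (x, y): (1, 1), (2, 2), (3, 3)

If W = 1, cost = 0 = 1/3 {(1·1 - 1)^2 + (1·2 - 2)^2 + (1·3 - 3)^2}

If W = 0, cost = 4.67 = 1/3 {(0·1 - 1)^2 + (0·2 - 2)^2 + (0·3 - 3)^2}

If W = 2, cost = 4.67 = 1/3 {(2·1 - 1)^2 + (2·2 - 2)^2 + (2·3 - 3)^2}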

Plotting the cost on the y-axis against W on the x-axis gives a quadratic curve shaped like x^2.

The cost is smallest at W = 1.
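The hand-computed cost values can be checked with a few lines of plain Python. This is a minimal sketch: the function name `cost` and the sampled W values are chosen here for illustration and are not from the lecture.

```python
# Training data from the notes: (1, 1), (2, 2), (3, 3)
X = [1, 2, 3]
Y = [1, 2, 3]


def cost(w):
    # Mean squared error of the simplified hypothesis H(x) = w * x.
    m = len(X)
    return sum((w * x - y) ** 2 for x, y in zip(X, Y)) / m


# Sample the curve at a few points on both sides of w = 1.
for w in [-1, 0, 1, 2, 3]:
    print(f"w = {w:2d}, cost = {cost(w):.2f}")
```

The values for w = 0 and w = 2 come out equal, and w = 1 gives zero, which is what makes the curve look like a parabola centered on w = 1.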

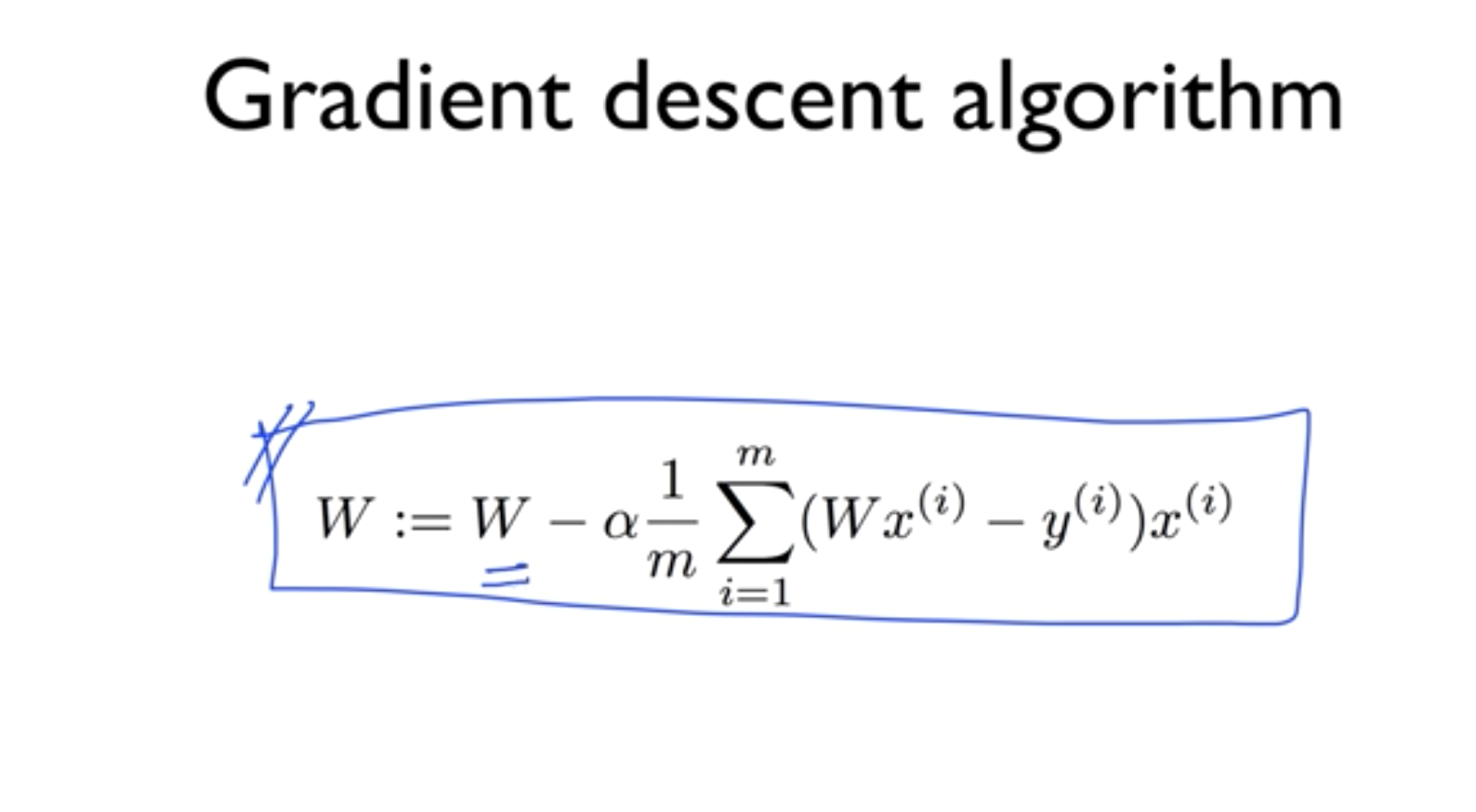

The algorithm that finds the lowest point by walking down the slope of this graph is the gradient descent algorithm.

- It minimizes the cost function.

- Gradient descent is used in many minimization problems.

- For a given cost function cost(W, b), it finds the W and b that minimize the cost.

- It can be applied to more general functions: cost(w1, w2, ...).

How does it work?

1. Start with initial guesses (for example, start at 0).

2. Keep changing W (and b) to reduce cost(W, b).

3. Each time you change the parameters, follow the gradient in the direction that reduces the cost the most.

4. Repeat.

5. Do so until you converge to a local minimum.

6. It has an interesting property: where you start can determine which minimum you end up in.
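The last property can be seen on a small non-convex example. The sketch below is not from the lecture: the function f(x) = (x^2 - 1)^2, the learning rate 0.05, and the step count are assumptions, picked because f has two minima, at x = -1 and x = 1.

```python
def f_prime(x):
    # Derivative of f(x) = (x**2 - 1)**2, a curve with two minima.
    return 4 * x * (x ** 2 - 1)


def gradient_descent(start, learning_rate=0.05, steps=200):
    # Repeatedly step against the gradient, starting from `start`.
    x = start
    for _ in range(steps):
        x = x - learning_rate * f_prime(x)
    return x


left = gradient_descent(-0.5)   # starts to the left of the hump at x = 0
right = gradient_descent(0.5)   # starts to the right of the hump at x = 0
print(left, right)
```

Both runs use the same update rule. Only the starting point differs, and they settle in different minima.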

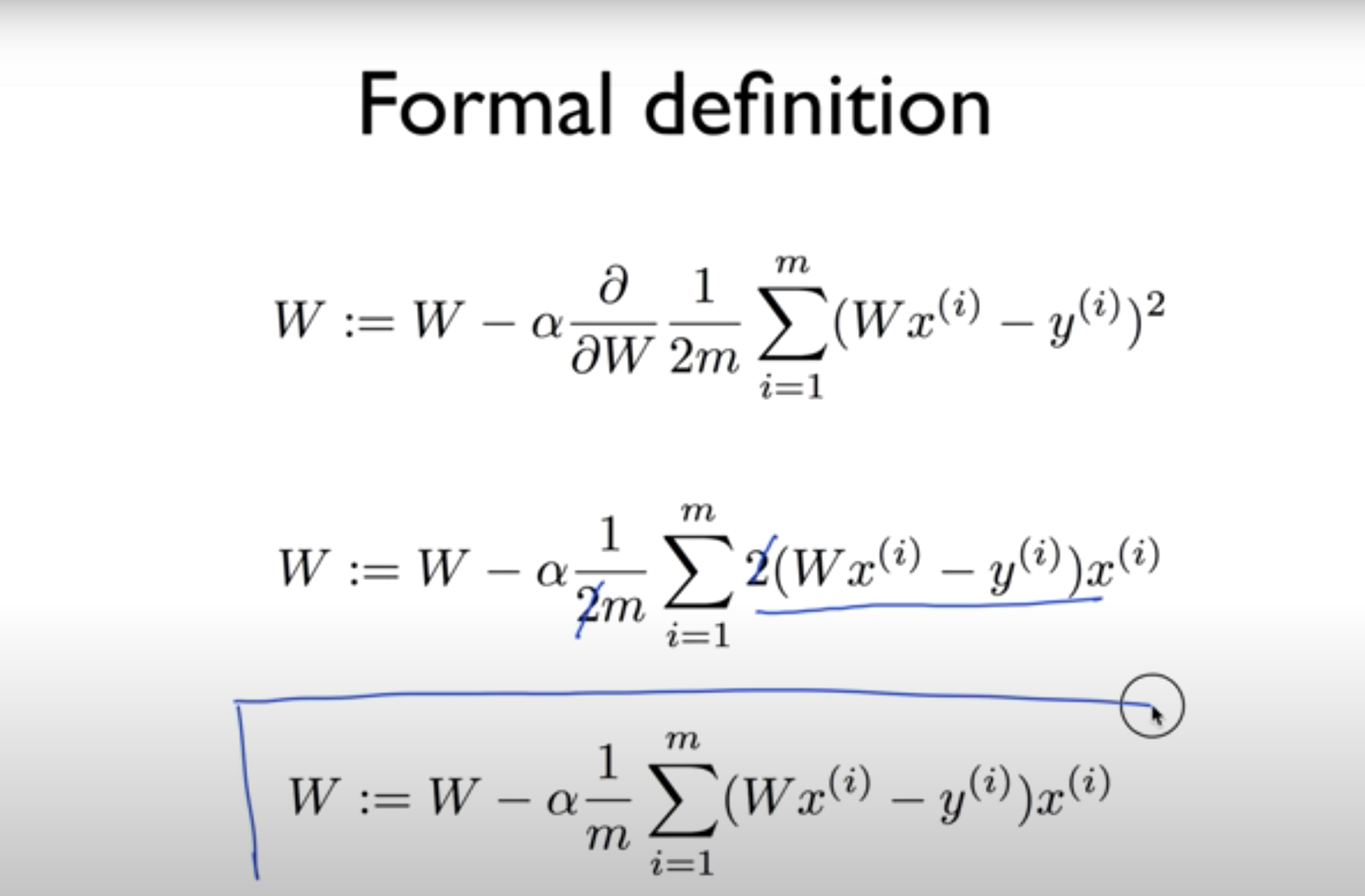

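Formal definition

$cost(W) = \frac{1}{2m} \sum_{i=1}^{m} (W x_i - y_i)^2$

$W := W - \alpha \frac{\partial}{\partial W} cost(W)$

Here $\alpha$ is the learning rate, and the derivative term is the derivative of the cost function defined above.

Carrying out that differentiation gives the following formula.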

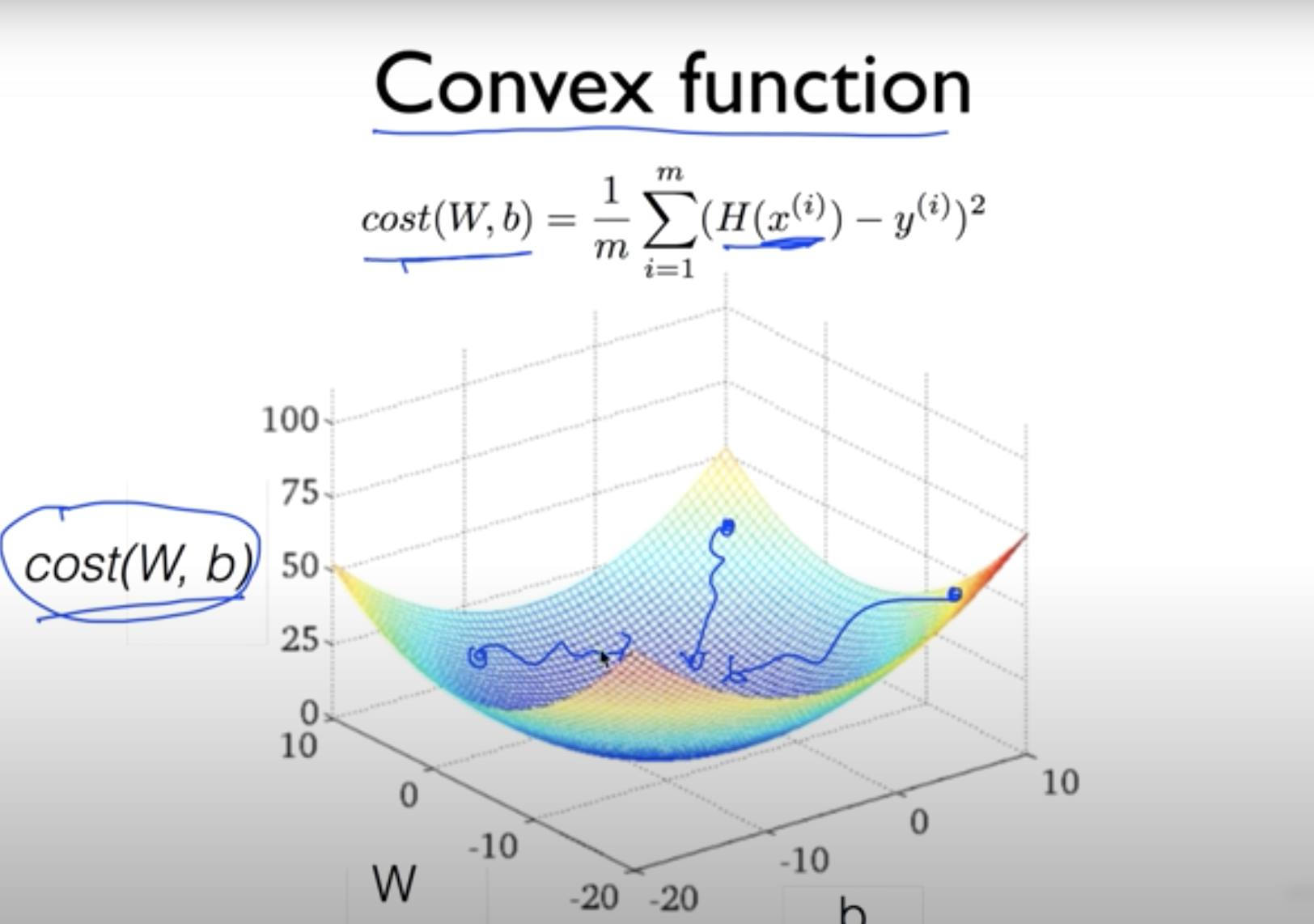
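For reference, the derivation behind the update rule can be written out step by step. This is a sketch based on the cost definition above. It assumes the hypothesis H(x) = Wx with no bias term, and the image itself may use slightly different notation.

```latex
\begin{aligned}
cost(W) &= \frac{1}{2m} \sum_{i=1}^{m} (W x_i - y_i)^2 \\
\frac{\partial}{\partial W} cost(W)
  &= \frac{1}{2m} \sum_{i=1}^{m} 2 (W x_i - y_i)\, x_i
   = \frac{1}{m} \sum_{i=1}^{m} (W x_i - y_i)\, x_i \\
W &:= W - \alpha \frac{1}{m} \sum_{i=1}^{m} (W x_i - y_i)\, x_i
\end{aligned}
```

The factor 1/2 in the cost is there only to cancel the 2 produced by differentiating the square. It does not change where the minimum is.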

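If the cost surface looks like a bumpy hill, the result depends on where you start.

With the h(x) we currently have, gradient descent reaches the same point from any starting point, so it always finds the answer.

That is why you should check that the cost function is convex.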
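One way to see this is to run gradient descent on the convex cost of H(x) = Wx from several starting points. The sketch below uses the training data (1, 1), (2, 2), (3, 3) from these notes. The learning rate 0.1, the 100 steps, and the starting values are assumptions made for illustration.

```python
X = [1, 2, 3]
Y = [1, 2, 3]


def gradient(w):
    # d/dW of cost(W) = 1/(2m) * sum((W*x - y)^2), i.e. 1/m * sum((W*x - y) * x)
    m = len(X)
    return sum((w * x - y) * x for x, y in zip(X, Y)) / m


def fit(w_start, alpha=0.1, steps=100):
    # Apply the update W := W - alpha * gradient(W) a fixed number of times.
    w = w_start
    for _ in range(steps):
        w = w - alpha * gradient(w)
    return w


starts = (-3.0, 0.0, 5.0)
results = [fit(w0) for w0 in starts]
for w0, w in zip(starts, results):
    print(f"start = {w0:5.1f} -> W = {w:.4f}")
```

Every run ends at W ≈ 1, the same minimum found by hand for this data.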

"""

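The source code can be downloaded from this GitHub repository.

The lecture itself is four years old and is based on TensorFlow 1.0, so the actual code covered in the lecture is out of date.

Of course, you can still run it by using import tensorflow.compat.v1 as tf (followed by tf.disable_v2_behavior(), since placeholders and sessions do not work under eager execution).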

# Lab 3 Minimizing Cost

import tensorflow as tf

import matplotlib.pyplot as plt

X = [1, 2, 3]

Y = [1, 2, 3]

W = tf.placeholder(tf.float32)

# Our hypothesis for linear model X * W

hypothesis = X * W

# cost/loss function

cost = tf.reduce_mean(tf.square(hypothesis - Y))

# Variables for plotting cost function

W_history = []

cost_history = []

# Launch the graph in a session.

with tf.Session() as sess:

    for i in range(-30, 50):

        curr_W = i * 0.1

        curr_cost = sess.run(cost, feed_dict={W: curr_W})

        W_history.append(curr_W)

        cost_history.append(curr_cost)

# Show the cost function

plt.plot(W_history, cost_history)

plt.show()

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

<ipython-input-1-700abf8b37f3> in <module>()

6 Y = [1, 2, 3]

7

8 W = tf.placeholder(tf.float32)

9

10 # Our hypothesis for linear model X * W

AttributeError: module 'tensorflow' has no attribute 'placeholder'

The current version of TensorFlow (2.x) no longer has tf.placeholder in its top-level API. The TF2 version of this lab is here:

github.com/hunkim/DeepLearningZeroToAll/blob/master/tf2/tf2-03-1-minimizing_cost_show_graph.py


# Lab 3 Minimizing Cost

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

x_train = [1, 2, 3, 4]

y_train = [0, -1, -2, -3]

tf.model = tf.keras.Sequential()

tf.model.add(tf.keras.layers.Dense(units=1, input_dim=1))

# `lr` is a deprecated alias; `learning_rate` is the supported argument name
sgd = tf.keras.optimizers.SGD(learning_rate=0.1)

tf.model.compile(loss='mse', optimizer=sgd)

tf.model.summary()

# fit() trains the model and returns the training history

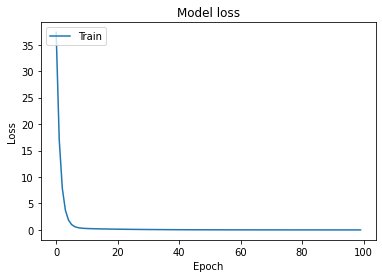

history = tf.model.fit(x_train, y_train, epochs=100)

y_predict = tf.model.predict(np.array([5, 4]))

print(y_predict)

# Plot training loss values (no validation data is passed to fit())

plt.plot(history.history['loss'])

plt.title('Model loss')

plt.ylabel('Loss')

plt.xlabel('Epoch')

plt.legend(['Train'], loc='upper left')

plt.show()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 1) 2

=================================================================

Total params: 2

Trainable params: 2

Non-trainable params: 0

_________________________________________________________________

Epoch 1/100

1/1 [==============================] - 1s 536ms/step - loss: 37.3459

Epoch 2/100

1/1 [==============================] - 0s 3ms/step - loss: 16.9986

Epoch 3/100

1/1 [==============================] - 0s 4ms/step - loss: 7.8495

Epoch 4/100

1/1 [==============================] - 0s 3ms/step - loss: 3.7288

Epoch 5/100

...

Epoch 97/100

1/1 [==============================] - 0s 3ms/step - loss: 0.0014

Epoch 98/100

1/1 [==============================] - 0s 4ms/step - loss: 0.0013

Epoch 99/100

1/1 [==============================] - 0s 4ms/step - loss: 0.0012

Epoch 100/100

1/1 [==============================] - 0s 4ms/step - loss: 0.0011

[[-3.9437876]

[-2.971077 ]]

